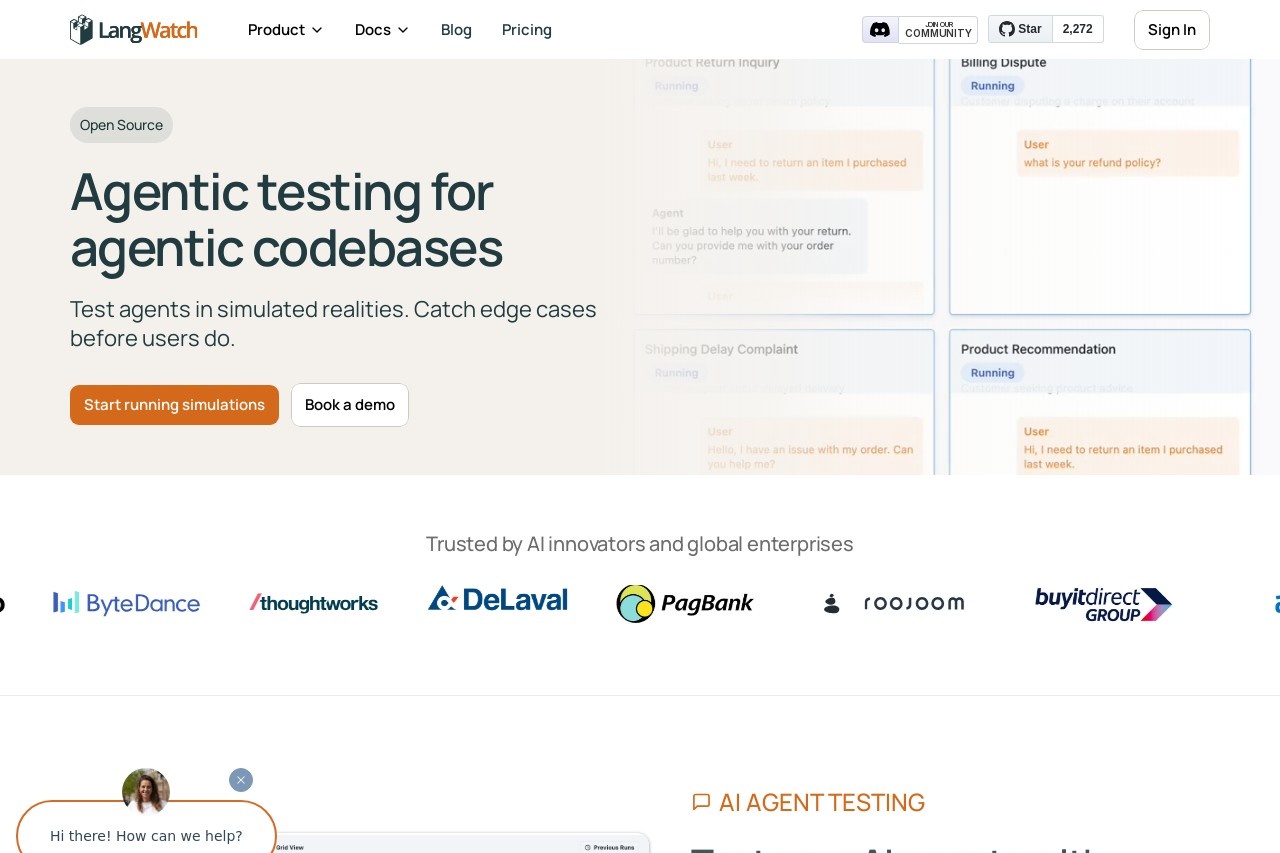

LangWatch: A Platform for AI Agent Testing and LLM Evaluation

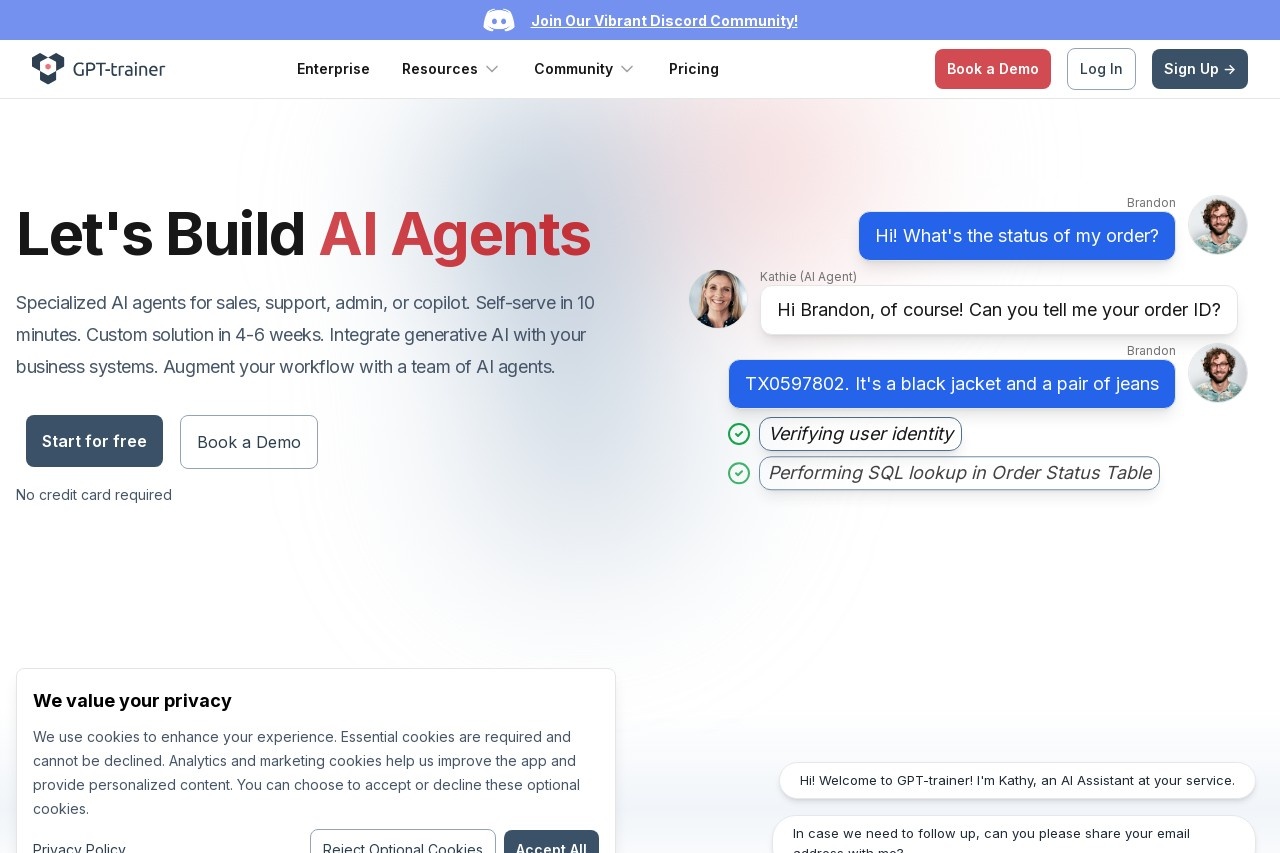

LangWatch is a platform for testing and evaluating AI agents and large language models (LLMs). Whether you're a developer, a researcher, or a business building on AI, LangWatch provides tools to assess performance, accuracy, and reliability in a structured environment.

Key Features of LangWatch

- Comprehensive Testing Framework: Evaluate AI agents across multiple metrics, including response quality, coherence, and task completion.

- LLM Benchmarking: Compare different language models to identify strengths and weaknesses for specific use cases.

- Customizable Workflows: Tailor tests to your requirements, from simple Q&A to complex multi-step interactions.

- Real-time Analytics: Gain insights through detailed reports and visualizations to track improvements over time.
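To make the idea of scoring models across several metrics concrete, here is a minimal, generic sketch in Python. It does not use the LangWatch SDK or reflect its API. Every name in it (`EvalCase`, `keyword_coverage`, `task_completed`, `benchmark`, and the two stub models) is a hypothetical illustration, and the keyword-based metrics are deliberately simple stand-ins for real quality measures.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Dict, List


@dataclass
class EvalCase:
    """Hypothetical test case: a prompt plus terms a good answer should mention."""
    prompt: str
    expected_keywords: List[str]


def keyword_coverage(response: str, case: EvalCase) -> float:
    """Fraction of expected keywords found in the response (case-insensitive)."""
    if not case.expected_keywords:
        return 0.0
    text = response.lower()
    hits = sum(1 for kw in case.expected_keywords if kw.lower() in text)
    return hits / len(case.expected_keywords)


def task_completed(response: str, case: EvalCase) -> float:
    """1.0 if every expected keyword appears in the response, otherwise 0.0."""
    return 1.0 if keyword_coverage(response, case) == 1.0 else 0.0


# Illustrative metric registry; real evaluations would use richer metrics.
METRICS: Dict[str, Callable[[str, EvalCase], float]] = {
    "keyword_coverage": keyword_coverage,
    "task_completion": task_completed,
}


def benchmark(
    models: Dict[str, Callable[[str], str]], cases: List[EvalCase]
) -> Dict[str, Dict[str, float]]:
    """Run every model on every case and average each metric per model."""
    report: Dict[str, Dict[str, float]] = {}
    for name, model in models.items():
        responses = [model(case.prompt) for case in cases]
        report[name] = {
            metric: mean(fn(r, c) for r, c in zip(responses, cases))
            for metric, fn in METRICS.items()
        }
    return report


# Stub "models" standing in for real LLM calls (assumptions for the sketch).
def model_a(prompt: str) -> str:
    return "Paris is the capital of France."


def model_b(prompt: str) -> str:
    return "I am not sure."


cases = [EvalCase("What is the capital of France?", ["Paris", "France"])]
report = benchmark({"model_a": model_a, "model_b": model_b}, cases)
for name, scores in report.items():
    print(name, scores)
```

In practice the stub functions would be replaced by calls to the models under test, and the per-model averages could be stored after each run to track changes over time.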

Why Choose LangWatch?

As AI systems become more sophisticated, ensuring their reliability is critical. LangWatch simplifies this process by offering:

- Scalability: Test models at any scale, from small prototypes to enterprise-level deployments.

- User-Friendly Interface: Intuitive dashboards make it easy to set up and monitor evaluations.

- Collaboration Tools: Share results and collaborate with teams to refine AI performance.

Use Cases

LangWatch is versatile and supports a wide range of applications, including:

- Chatbot development and optimization

- Content generation quality assessment

- Bias and fairness testing in AI responses

- Automated customer support evaluation

By integrating LangWatch into your AI development pipeline, you can ensure your models meet high standards of performance and deliver consistent, trustworthy results.