BAGEL: An Open-Source Unified Multimodal Model

BAGEL is an open-source framework designed to unify multimodal artificial intelligence (AI) capabilities in a single model. It handles several data types, such as text, images, audio, and video, in one model instead of a pipeline of separate specialized models. This aims to simplify complex AI workflows while keeping performance and flexibility high.
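
A small sketch can make "a single cohesive model" more concrete. A common design in unified multimodal models is to arrange every input as one interleaved sequence that a single network then processes. The Python below is a hypothetical illustration of that layout only. `TextSegment`, `ImageSegment`, and `to_token_layout` are invented names and are not part of BAGEL's API. Whitespace splitting stands in for a real tokenizer, and the number of visual tokens per image is an assumed parameter.

```python
from dataclasses import dataclass
from typing import List, Union

# Hypothetical segment types for illustration only; these are NOT BAGEL's API.
@dataclass
class TextSegment:
    text: str

@dataclass
class ImageSegment:
    path: str         # reference to an image file (not loaded in this sketch)
    num_patches: int  # assumed number of visual tokens the image encodes to

Segment = Union[TextSegment, ImageSegment]

def to_token_layout(segments: List[Segment]) -> List[str]:
    """Flatten an interleaved input into one ordered list of token-type labels.

    Whitespace splitting is a stand-in for a real tokenizer. Each image
    contributes `num_patches` placeholder visual tokens between begin/end markers.
    """
    layout: List[str] = []
    for seg in segments:
        if isinstance(seg, TextSegment):
            layout.extend("txt" for _ in seg.text.split())
        elif isinstance(seg, ImageSegment):
            layout.append("<img>")
            layout.extend("vis" for _ in range(seg.num_patches))
            layout.append("</img>")
        else:
            raise TypeError(f"unsupported segment: {seg!r}")
    return layout

if __name__ == "__main__":
    prompt = [
        TextSegment("Describe this picture:"),
        ImageSegment("cat.png", num_patches=4),
        TextSegment("in one sentence."),
    ]
    print(to_token_layout(prompt))
```

Running it prints one list in which text and image tokens keep their original order. Other modalities such as audio or video could be added the same way, as further segment types feeding the same sequence.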

Key Features of BAGEL

- Multimodal Integration: Processes inputs in multiple formats together and relates them to one another, so context from one modality can inform how another is interpreted.

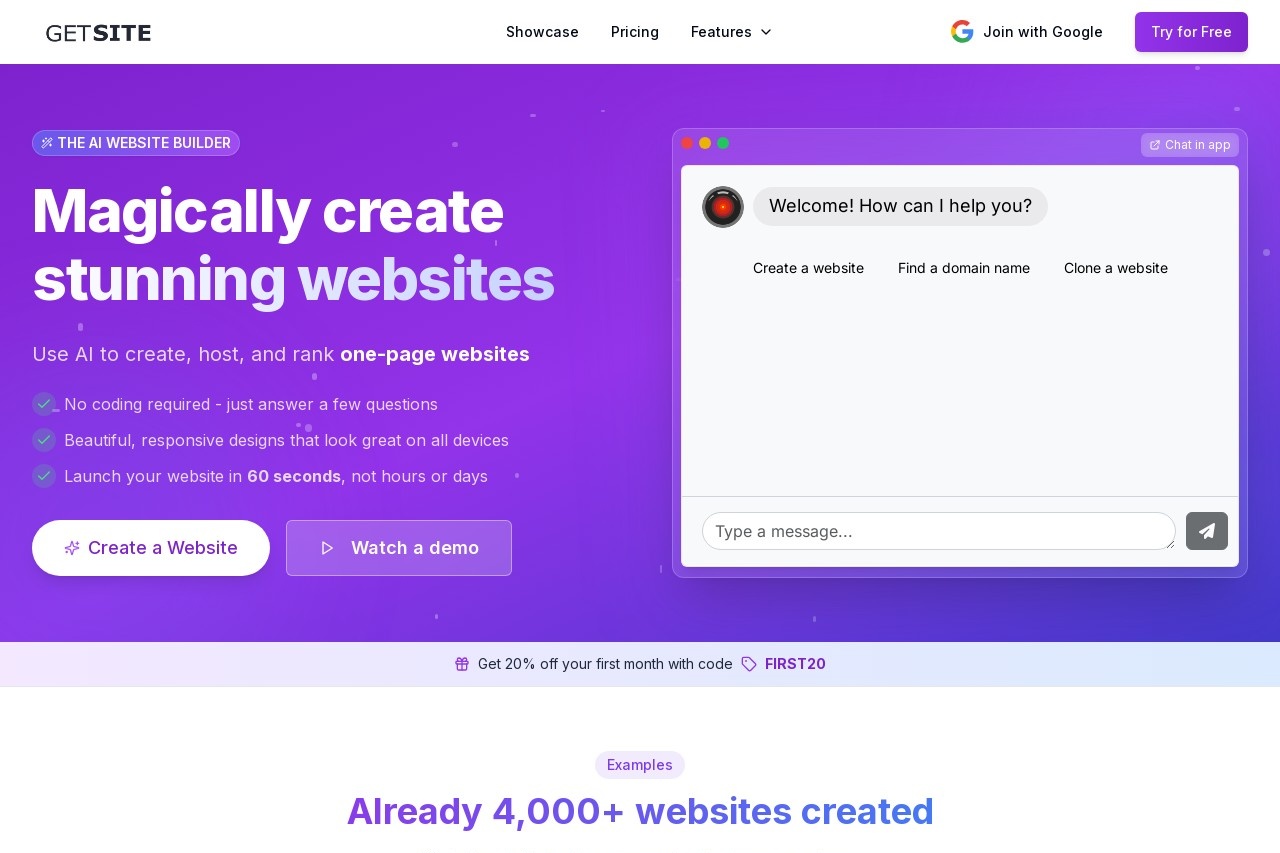

- Open-Source Accessibility: Freely available for researchers and developers to modify, extend, and deploy in diverse applications.

- Scalability: Supports both small-scale experiments and large-scale industrial deployments.

- Community-Driven: Encourages collaboration through transparent development and shared improvements.

Applications of BAGEL

BAGEL's unified approach makes it well suited to applications that combine several modalities, including:

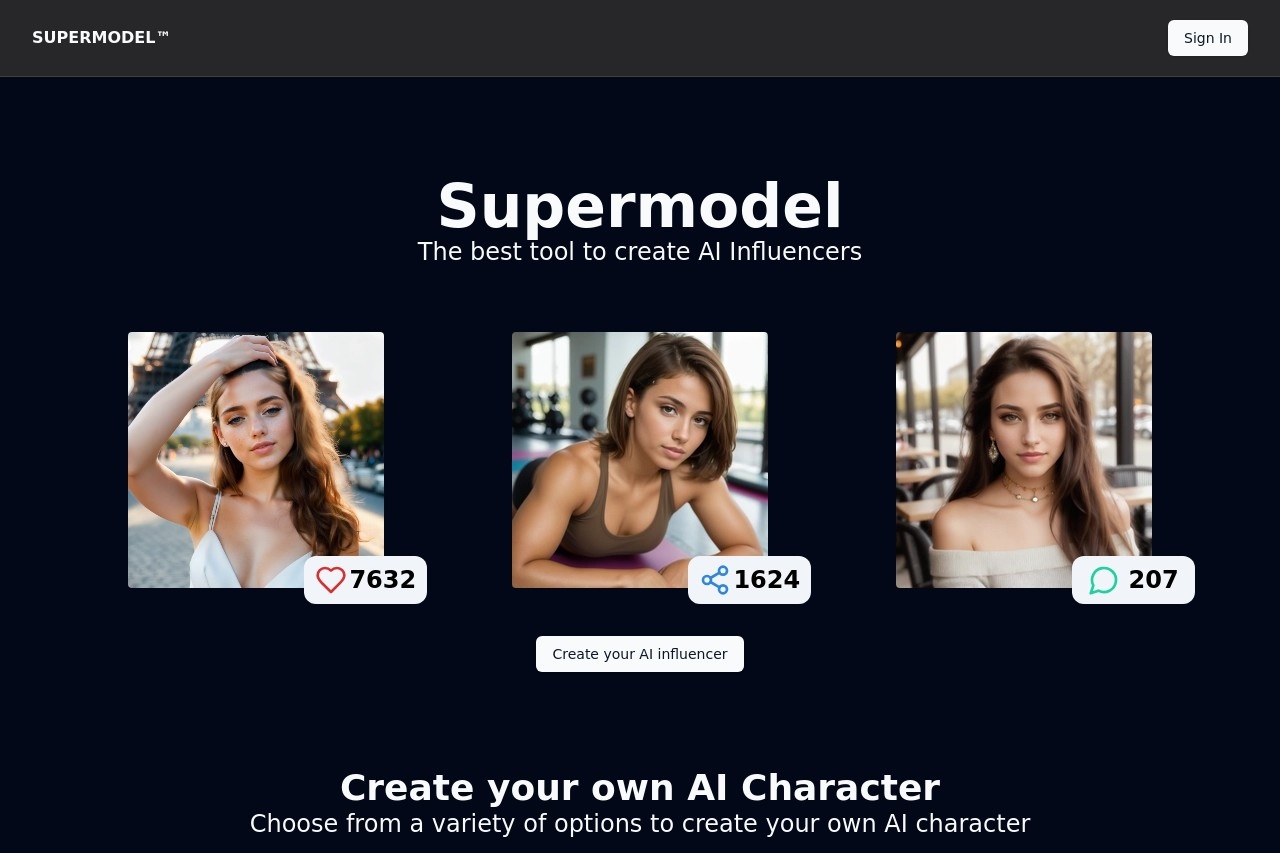

- Content Generation: Creating multimedia content (e.g., text-to-image synthesis or video captioning).

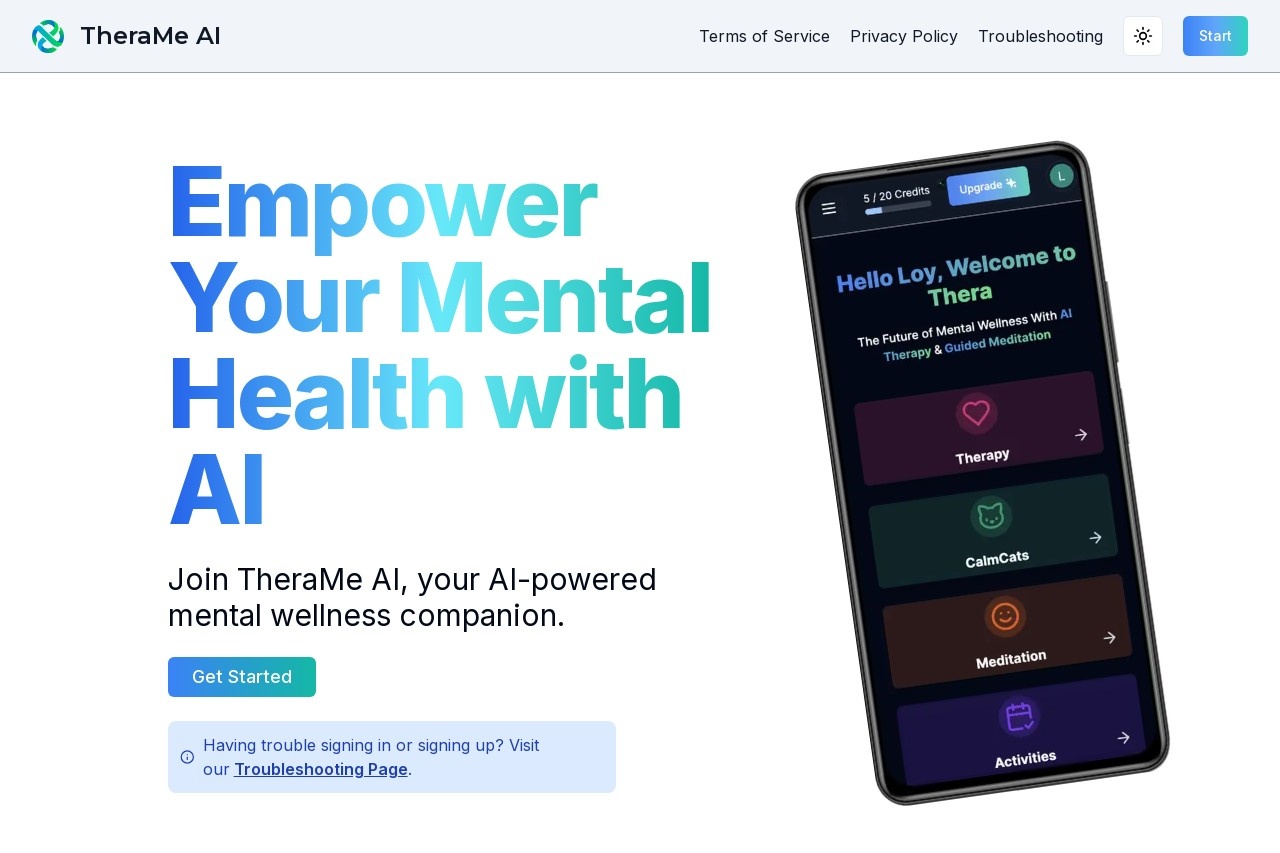

- Human-Computer Interaction: Enhancing chatbots and virtual assistants with multimodal inputs (voice + text + visuals).

- Healthcare: Analyzing medical reports, scans, and patient records together to support diagnosis.

Why Choose BAGEL?

Unlike proprietary models, BAGEL can be inspected and adapted by anyone. Its open-source nature allows developers to:

- Customize the model for niche use cases within the terms of its open-source license, without negotiating access to a proprietary model.

- Integrate with existing tools and frameworks (e.g., PyTorch, TensorFlow).

- Contribute to a growing ecosystem of multimodal AI solutions.

By making a unified multimodal model openly available, BAGEL lowers the barrier to working with advanced multimodal technology. Whether for research or commercial projects, it offers a solid foundation to build on.