Fireworks AI offers high-speed inference for open-source LLMs and image models, with free fine-tuning and deployment options.


Fireworks AI: High-Speed AI Inference Platform

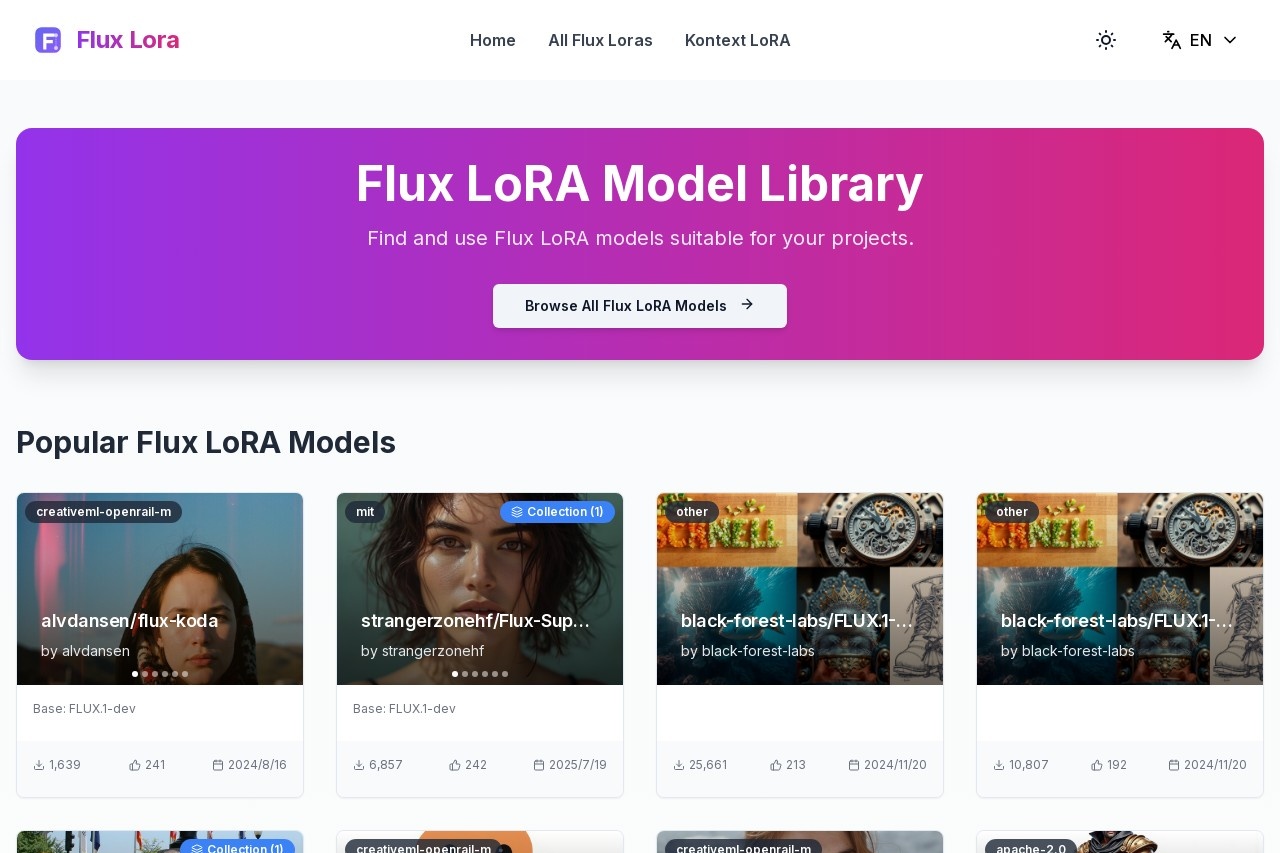

Fireworks AI is a cutting-edge platform designed to accelerate the deployment and inference of open-source large language models (LLMs) and image generation models. Its focus on speed, efficiency, and accessibility lets developers and businesses run these models in production without sacrificing performance.

Key Features

- Blazing-Fast Inference: Optimized infrastructure delivers low-latency responses for real-time applications

- Open-Source Model Support: Supports popular open models such as LLaMA, Mistral, and Stable Diffusion

- Free Fine-Tuning: Customize models for your specific use case at no additional cost

- Flexible Deployment: Choose between cloud-based and on-premises deployments

- Developer-Friendly API: Simple integration with existing applications and workflows
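The API bullet can be made concrete with a small request sketch. It assumes that Fireworks exposes an OpenAI-compatible chat completions endpoint at `https://api.fireworks.ai/inference/v1`, that requests authenticate with a bearer token read from a `FIREWORKS_API_KEY` environment variable, and that the model ID shown is available. Treat all three as assumptions to check against the current Fireworks documentation. The helpers `build_chat_request` and `send` are hypothetical names. The request is built with the standard library only, so it can be inspected without sending anything.

```python
import json
import os
import urllib.request

# Assumed values: verify against the current Fireworks documentation.
BASE_URL = "https://api.fireworks.ai/inference/v1"
DEFAULT_MODEL = "accounts/fireworks/models/llama-v3p1-8b-instruct"  # illustrative model ID


def build_chat_request(prompt: str, api_key: str, model: str = DEFAULT_MODEL,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(request, timeout=30) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("FIREWORKS_API_KEY")
    if key:
        print(send(build_chat_request("Say hello in five words.", key)))
    else:
        print("Set FIREWORKS_API_KEY to send a real request.")
```

Keeping request construction separate from sending makes the payload easy to log or unit-test. If the endpoint is OpenAI-compatible as assumed, an existing OpenAI client library pointed at the same base URL could replace `send`.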

Why Choose Fireworks AI?

Unlike many AI platforms that lock users into proprietary models, Fireworks AI embraces the open-source ecosystem while addressing the practical challenges of running those models in production: latency, scaling, and reliability. The platform's architecture is specifically engineered to handle the computational demands of modern AI models, offering:

- Inference that the company reports as 3-5x faster than standard implementations

- Automatic scaling to handle traffic spikes

- Enterprise-grade reliability with a 99.9% uptime SLA
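The 3-5x figure depends on what "standard implementation" means, so it is worth measuring on your own workload. The sketch below is a minimal harness. It assumes each backend can be wrapped in a zero-argument callable, for example a hypothetical function that sends one fixed request. `time_calls` and `median_speedup` are illustrative names, not part of any Fireworks tooling. Here the sleeps stand in for real requests.

```python
import statistics
import time
from typing import Callable, List


def time_calls(fn: Callable[[], object], n: int = 20) -> List[float]:
    """Call fn n times and return each call's wall-clock latency in milliseconds."""
    latencies = []
    for _ in range(n):
        start = time.perf_counter()
        fn()
        latencies.append((time.perf_counter() - start) * 1000.0)
    return latencies


def median_speedup(baseline_ms: List[float], candidate_ms: List[float]) -> float:
    """Ratio of median latencies; 3.0 means the candidate is 3x faster."""
    return statistics.median(baseline_ms) / statistics.median(candidate_ms)


if __name__ == "__main__":
    # Stand-in workloads; replace with real calls to each inference backend.
    slow = time_calls(lambda: time.sleep(0.03), n=5)
    fast = time_calls(lambda: time.sleep(0.01), n=5)
    print(f"median speedup: {median_speedup(slow, fast):.1f}x")
```

Medians are used instead of means because latency distributions tend to have long tails. For a fair comparison, send identical prompts with the same output length to both backends.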

Getting Started

Fireworks AI offers a generous free tier that allows developers to experiment with model fine-tuning and deployment. The platform provides comprehensive documentation, code samples, and a supportive community to help users quickly implement AI solutions for:

- Chat applications and virtual assistants

- Content generation and summarization

- Image creation and editing tools

- Data analysis and insight generation
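Chat applications usually stream responses so users see text as it is generated. The sketch below assumes that Fireworks streaming follows the OpenAI-compatible server-sent events format when `"stream": true` is set in the request body. Under that format, each event arrives as a `data: {json}` line carrying a `choices[].delta.content` fragment, and the stream ends with `data: [DONE]`. Confirm this against the Fireworks documentation before relying on it. `iter_stream_text` is a hypothetical helper, and the sample events are hand-written, not real API output.

```python
import json
from typing import Iterable, Iterator


def iter_stream_text(lines: Iterable[str]) -> Iterator[str]:
    """Yield text fragments from OpenAI-style server-sent event lines.

    Assumes each event is a line of the form 'data: {json}' and that the
    stream ends with 'data: [DONE]'; blank lines and comments are skipped.
    """
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and SSE comments
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break  # end-of-stream sentinel
        chunk = json.loads(data)
        for choice in chunk.get("choices", []):
            text = (choice.get("delta") or {}).get("content")
            if text:  # role-only or empty deltas carry no text
                yield text


if __name__ == "__main__":
    # Hand-written sample events; a real client would read lines from the HTTP response.
    sample = [
        'data: {"choices": [{"delta": {"role": "assistant"}}]}',
        "",
        'data: {"choices": [{"delta": {"content": "Hello"}}]}',
        'data: {"choices": [{"delta": {"content": ", world"}}]}',
        "data: [DONE]",
    ]
    print("".join(iter_stream_text(sample)))
```

Because the parser consumes any iterable of lines, it can be tested offline with fixed samples like these and then fed the decoded lines of a live streaming response.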

As AI adoption grows across industries, Fireworks AI positions itself as the performance-optimized bridge between open-source innovation and real-world applications.