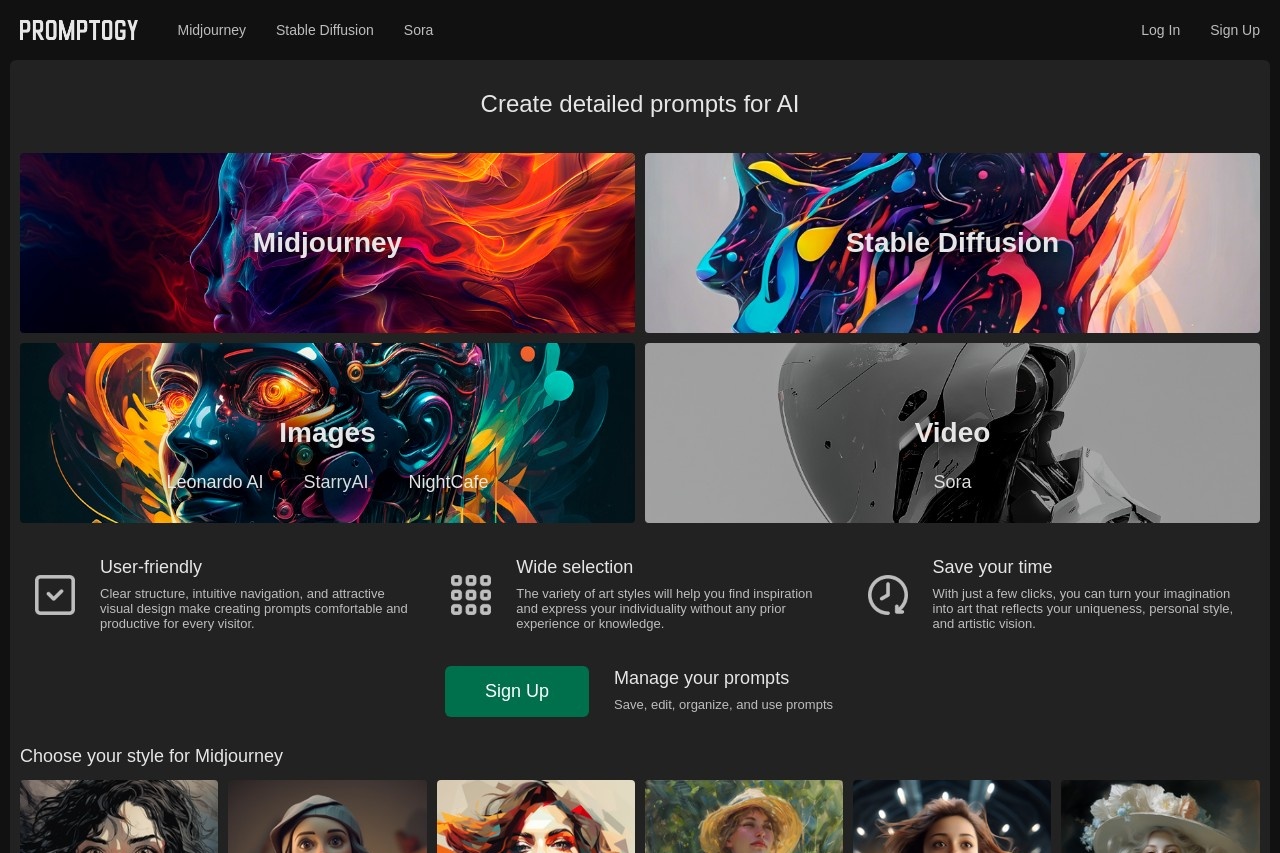

Stable Diffusion: A Free Online Deep Learning Model for Text-to-Image Generation

Stable Diffusion is a deep learning model that generates high-quality images from textual descriptions. Its code and model weights are publicly released, and it has gained widespread popularity for its ability to create realistic and artistic visuals from simple text prompts. It has made text-to-image generation, once limited to large research labs, available to a broad audience.

How Stable Diffusion Works

The model operates through a sophisticated process called diffusion, where it:

- Starts with random noise and gradually refines it

- Uses text embeddings to guide the image generation

- Iteratively improves the output through multiple steps

- Leverages a large dataset of image-text pairs for training
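The loop described above can be illustrated with a deliberately tiny sketch. It is not the real model: a trained neural network normally predicts what noise to remove at each step, whereas this toy simply assumes the desired result (`target`, a hypothetical stand-in for what the text embedding steers toward) is already known. The function name `toy_denoise` and all values are made up for illustration.

```python
import random

def toy_denoise(target, steps=50, seed=0):
    """Refine random noise toward `target` over `steps` iterations.

    `target` is a hypothetical stand-in for the image that a trained,
    text-guided network would steer the sample toward.
    """
    rng = random.Random(seed)
    # Start from pure Gaussian noise, one value per "pixel".
    sample = [rng.gauss(0.0, 1.0) for _ in target]
    errors = []
    for step in range(steps):
        # Remove a growing fraction of the remaining difference each step.
        # A real model estimates this correction with a neural network.
        fraction = 1.0 / (steps - step)
        sample = [x + fraction * (t - x) for x, t in zip(sample, target)]
        errors.append(max(abs(x - t) for x, t in zip(sample, target)))
    return sample, errors

target = [0.2, 0.8, 0.5, 0.1]
final, errors = toy_denoise(target)
print(f"error after first step: {errors[0]:.3f}, after last step: {errors[-1]:.3f}")
```

The printed errors show the sample moving from noise toward the target as the steps accumulate, which is the same shape of process the bullets describe.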

Key Features

- Free to use: Available as open-source software

- High-quality outputs: Generates detailed, realistic images

- Customizable: Allows control over style and composition

- Efficient: Runs on a consumer-grade GPU rather than requiring data-center hardware

- Versatile: Supports various artistic styles and subjects

Applications

Stable Diffusion has numerous practical applications across different industries:

- Concept art and illustration for games and films

- Marketing and advertising content creation

- Educational materials and visual aids

- Product design and prototyping

- Personal creative projects and digital art

Getting Started

To begin using Stable Diffusion, users can access web-based implementations or download the model to run locally. The basic workflow involves:

- Entering a descriptive text prompt

- Adjusting parameters such as image size and the number of sampling steps

- Generating multiple variations

- Refining the output through iterative prompting
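One way to picture this workflow in code is as a small settings object that is validated once and then copied with different seeds to produce variations. The class `GenerationSettings`, its field names, and its defaults are hypothetical and do not correspond to any particular tool. The multiple-of-8 size check is an assumption that mirrors a restriction found in several Stable Diffusion front ends.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class GenerationSettings:
    """Hypothetical container for the parameters of one generation run."""
    prompt: str
    width: int = 512
    height: int = 512
    steps: int = 30
    seed: int = 0

    def __post_init__(self):
        if not self.prompt.strip():
            raise ValueError("prompt must not be empty")
        # Assumption: image sizes must be multiples of 8.
        if self.width % 8 or self.height % 8:
            raise ValueError("width and height must be multiples of 8")
        if self.steps < 1:
            raise ValueError("steps must be at least 1")

def variations(base, count):
    """Return `count` copies of `base` that differ only in their seed."""
    return [replace(base, seed=base.seed + i) for i in range(count)]

base = GenerationSettings(prompt="a lighthouse at dusk, watercolor")
batch = variations(base, 4)
print([s.seed for s in batch])
```

Keeping the prompt fixed while changing only the seed is a simple way to explore variations. Refining the output then means editing the prompt and repeating the run.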

As the technology continues to evolve, Stable Diffusion is pushing the boundaries of what's possible in AI-assisted creativity, making professional-quality image generation accessible to everyone.

Stable Diffusion: AI-Powered Image Generation

Stable Diffusion is an artificial intelligence tool designed to generate high-quality image variations based on user inputs. It uses deep learning models to create unique visual content from text prompts or existing images. The copyright status of generated images depends on the jurisdiction and on the license of the model used, so output should not be assumed to be copyright-free.

Key Features

- Generates multiple image variations from a single input

- Produces output that can often be used commercially, subject to the model license and local copyright law

- Works with both text descriptions and image inputs

- Offers customizable parameters for style and composition

- Runs efficiently on consumer-grade hardware

How It Works

The system uses a diffusion model that gradually transforms random noise into coherent images through an iterative refinement process. When given a text prompt, the AI interprets the description and generates corresponding visual elements, maintaining semantic consistency throughout the creation process.
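A common technique for letting the prompt steer each refinement step is classifier-free guidance, which Stable Diffusion tools typically expose as a "guidance scale" setting. The sketch below shows only the arithmetic of that technique on made-up numbers. The function name `guided_noise` and the sample values are illustrative assumptions, and a real implementation applies the same formula to large tensors produced by the network.

```python
def guided_noise(uncond, cond, guidance_scale):
    """Combine two noise predictions into one prompt-guided prediction.

    uncond: prediction made without the prompt
    cond:   prediction made with the prompt
    """
    return [u + guidance_scale * (c - u) for u, c in zip(uncond, cond)]

uncond = [0.10, -0.20, 0.05]  # made-up values
cond = [0.30, -0.10, 0.00]    # made-up values

print(guided_noise(uncond, cond, 1.0))  # follows the prompted prediction
print(guided_noise(uncond, cond, 7.5))  # pushes further in the prompt's direction
```

A scale of 0 ignores the prompt entirely, while larger values follow it more strongly, usually at some cost to variety.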

Practical Applications

- Concept art and illustration generation

- Marketing and advertising content creation

- Product design prototyping

- Educational and research visualizations

- Creative brainstorming and inspiration

Technical Advantages

Unlike earlier diffusion-based systems that operate directly on pixels, Stable Diffusion runs the diffusion process in a compressed latent space. Working on this smaller representation allows it to produce high-resolution images without requiring excessive computational resources. This makes the technology accessible to individual creators and small businesses without specialized hardware.
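A quick calculation shows how much smaller the latent representation is. The sketch assumes an 8x reduction along each side and 4 latent channels, which are the figures used by the version 1 model family. Other versions may differ, and the function names are illustrative.

```python
def latent_shape(width, height, downscale=8, channels=4):
    """Latent shape (channels, height, width) for a given image size."""
    return (channels, height // downscale, width // downscale)

def compression_ratio(width, height, downscale=8, channels=4):
    """How many times fewer values the latent holds than the RGB image."""
    pixel_values = width * height * 3  # three color channels
    c, h, w = latent_shape(width, height, downscale, channels)
    return pixel_values / (c * h * w)

print(latent_shape(512, 512))       # (4, 64, 64)
print(compression_ratio(512, 512))  # 48.0
```

Under these assumptions, a 512x512 image is represented by 48 times fewer values during the diffusion steps. The decoder expands the latent back to full resolution only once, at the end.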

As AI-generated art continues to evolve, Stable Diffusion represents a significant leap forward in democratizing creative tools while maintaining high standards of output quality and versatility.

Stable Diffusion: AI-Powered Image Generation

Stable Diffusion uses artificial intelligence to generate high-quality images from text prompts. It enables users to create visuals simply by describing their ideas in natural language.

How It Works

The system utilizes a deep learning model trained on vast datasets of images and their corresponding descriptions. When you input a text prompt, the AI:

- Encodes your description into a numerical representation of the requested content

- Draws on the visual concepts and styles it learned during training

- Starts from random noise and refines it over multiple denoising steps

- Decodes the result into a unique image that matches your description

Key Features

- Text-to-Image Conversion: Transform written descriptions into visual art

- Style Adaptation: Create images in various artistic styles (realistic, cartoon, watercolor, etc.)

- Customization Options: Adjust parameters like resolution, aspect ratio, and detail level

- Rapid Iteration: Generate multiple variations quickly to explore creative possibilities

Applications

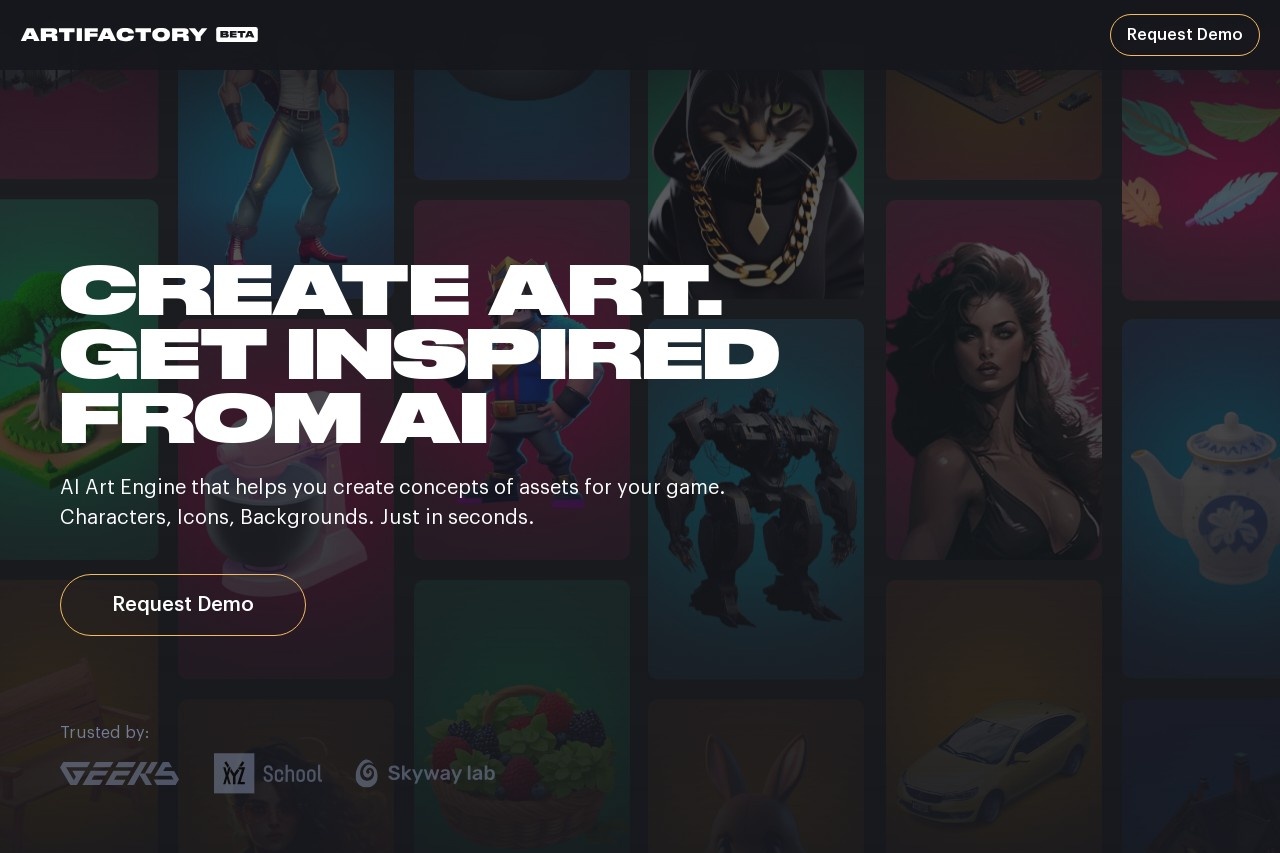

Stable Diffusion technology has numerous practical applications across industries:

- Creative Professionals: Concept artists, designers, and marketers use it for rapid prototyping

- Education: Visual aids for complex concepts in teaching materials

- Entertainment: Storyboarding and pre-visualization for films and games

- E-commerce: Product visualization and advertising content creation

Ethical Considerations

While powerful, the technology raises important questions about:

- Copyright and intellectual property rights

- Potential misuse for creating misleading content

- The impact on traditional creative professions

Responsible platforms implement safeguards and publish guidelines for ethical use.

As AI image generation continues to evolve, Stable Diffusion represents a significant leap forward in making creative tools accessible to everyone, while challenging us to reconsider the boundaries between human and machine creativity.

Stable Diffusion: A Free Online Deep Learning Model for Text-to-Image Generation

Stable Diffusion is a groundbreaking deep learning model that transforms text descriptions into high-quality images. As an open-source and free-to-use tool, it has democratized AI-powered art creation, enabling anyone to generate visuals simply by typing descriptive prompts.

How Stable Diffusion Works

The model generates an image in three stages:

- Text Encoding: Converts your written prompt into numerical representations

- Diffusion Process: Gradually refines random noise into a coherent image representation, guided by the encoded prompt

- Image Decoding: Transforms the refined representation into the final visual output
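The three stages can be pictured as three functions chained together. The skeleton below only shows how data flows between them. Every function is a toy stand-in with a hypothetical name: the real text encoder, denoising network, and decoder are trained neural networks, not the few lines shown here.

```python
import random

def encode_text(prompt, dim=4):
    """Toy text encoder: map the prompt to `dim` numbers in [0, 1)."""
    rng = random.Random(prompt)  # seeding with the string keeps this deterministic
    return [rng.random() for _ in range(dim)]

def diffuse(embedding, steps=20, rate=0.3, seed=0):
    """Toy diffusion: pull random noise toward the embedding step by step."""
    rng = random.Random(seed)
    latent = [rng.gauss(0.0, 1.0) for _ in embedding]
    for _ in range(steps):
        latent = [x + rate * (e - x) for x, e in zip(latent, embedding)]
    return latent

def decode_image(latent):
    """Toy decoder: map each latent value to an 8-bit grayscale pixel."""
    return [max(0, min(255, round(x * 255))) for x in latent]

def generate(prompt, steps=20, seed=0):
    """Chain the three stages: encode, diffuse, decode."""
    return decode_image(diffuse(encode_text(prompt), steps=steps, seed=seed))

pixels = generate("a red bicycle")
print(pixels)
```

Separating the stages this way also explains why the same prompt can be reused across many runs: only the diffusion stage starts from fresh random noise.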

Key Features

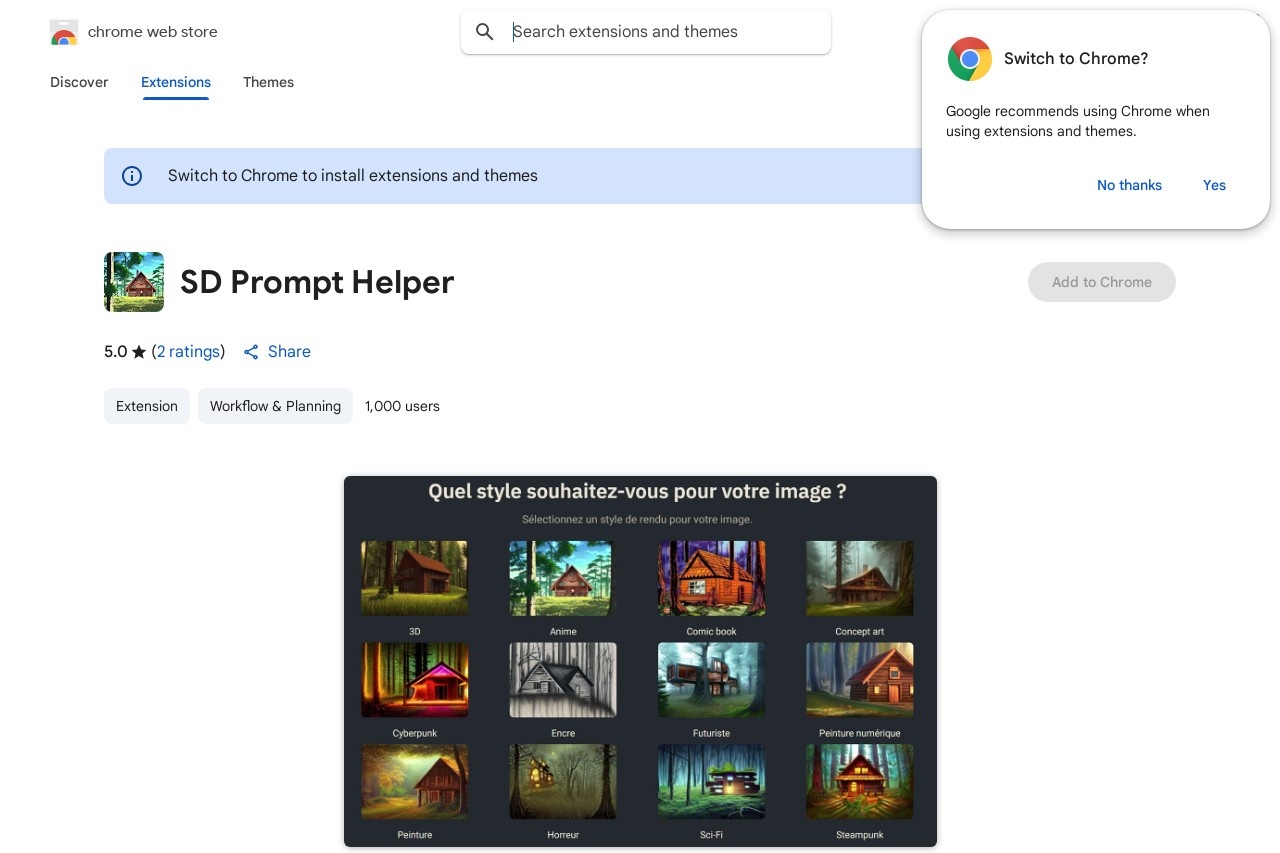

- Generates images in various styles (realistic, anime, painting, etc.)

- Supports detailed prompt engineering for precise results

- Runs efficiently on consumer-grade hardware

- Offers customization through parameters like seed values and sampling steps
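The seed matters because it fixes the random noise that generation starts from, while the number of sampling steps sets how many refinement passes are applied to that noise. The sketch below illustrates only the seed behavior, using Python's standard random number generator as a stand-in for the one a real implementation would use. The function name `initial_noise` is illustrative.

```python
import random

def initial_noise(seed, size=6):
    """Gaussian starting noise for one generation run, fixed by `seed`."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]

same_a = initial_noise(seed=42)
same_b = initial_noise(seed=42)
different = initial_noise(seed=43)

print(same_a == same_b)     # True: same seed, same starting noise
print(same_a == different)  # False: a new seed gives a new starting point
```

Reusing a seed with the same prompt and settings is how a result can be reproduced later. Changing only the seed yields a different variation.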

Applications

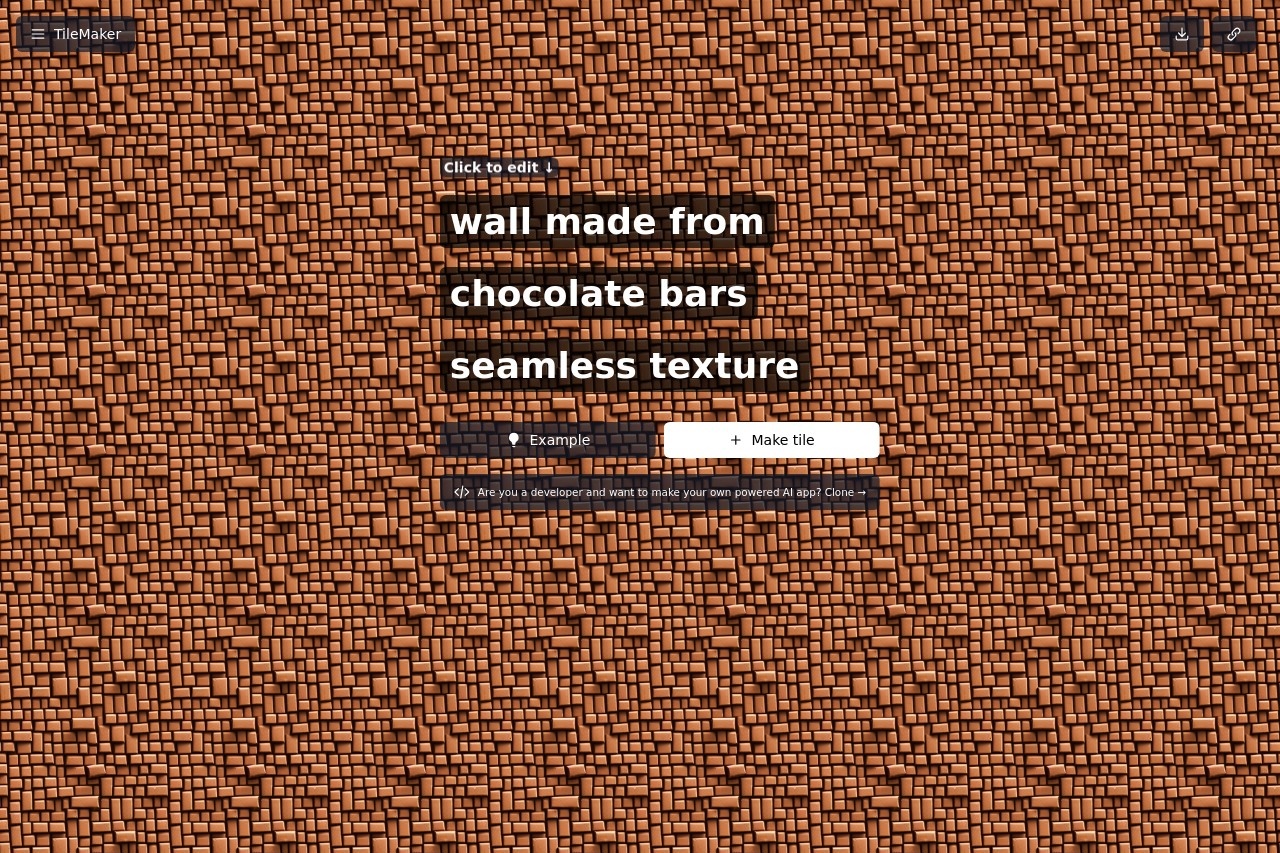

Stable Diffusion has found diverse applications across multiple fields:

- Creative Arts: Concept art, illustrations, and digital paintings

- Marketing: Quick visual prototyping for campaigns

- Education: Visualizing complex concepts for better understanding

- Product Design: Rapid iteration of design concepts

Getting Started

To begin using Stable Diffusion:

- Access a web-based interface or install locally

- Type your desired image description in the prompt box

- Adjust parameters (optional) for different results

- Generate and refine your images
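For a local install, these steps might look roughly like the sketch below, which assumes the Hugging Face `diffusers` and `torch` packages and a CUDA-capable GPU. None of these is named in this article, so treat the choice of library as an assumption. The helper names `build_call_kwargs` and `generate_image` are hypothetical, and the model identifier in the commented example is only a placeholder for whichever checkpoint you use.

```python
def build_call_kwargs(prompt, steps=30, guidance_scale=7.5, width=512, height=512):
    """Collect keyword arguments for a diffusers text-to-image pipeline call."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    return {
        "prompt": prompt,
        "num_inference_steps": steps,
        "guidance_scale": guidance_scale,
        "width": width,
        "height": height,
    }

def generate_image(prompt, model_id, seed=0, device="cuda", **overrides):
    """Run one generation locally. Needs torch, diffusers, and model weights."""
    # Imported lazily so the helper above works without these packages.
    import torch
    from diffusers import StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(model_id).to(device)
    generator = torch.Generator(device=device).manual_seed(seed)
    result = pipe(generator=generator, **build_call_kwargs(prompt, **overrides))
    return result.images[0]  # a PIL image

kwargs = build_call_kwargs("an astronaut sketching in a notebook", steps=25)
print(kwargs)

# Example call, commented out because it downloads several gigabytes of weights.
# The model identifier is an assumption; substitute the checkpoint you use.
# image = generate_image("an astronaut sketching in a notebook",
#                        "CompVis/stable-diffusion-v1-4")
# image.save("astronaut.png")
```

Web-based interfaces hide these details behind a prompt box and a few sliders, but the parameters they expose generally correspond to the same arguments.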

While powerful, users should be mindful of ethical considerations regarding copyright and appropriate content generation when using this technology.