Bolt Foundry: Unit Testing for LLMs

Bolt Foundry is a specialized platform that provides comprehensive unit testing for Large Language Models (LLMs). As LLMs become integral to modern applications, rigorous testing is essential to ensure they are reliable and accurate. Bolt Foundry addresses this need with tailored testing frameworks that help developers validate model behavior at a granular level.

Why Unit Testing Matters for LLMs

Unit testing is a critical step in the development lifecycle of any software, and LLM-based systems are no exception. It involves testing individual components or behaviors in isolation to confirm they work as expected. For LLMs, this might include:

- Input Validation: Verifying how the model processes different types of prompts.

- Output Consistency: Checking whether responses remain accurate and consistent across multiple runs.

- Edge Case Handling: Ensuring the model handles unusual or unexpected inputs gracefully.

- Bias Detection: Identifying and mitigating biased outputs early in development.
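To make these four categories concrete, here is a minimal sketch using Python's standard `unittest` module. It is not Bolt Foundry's API: `fake_model` is a hypothetical, deterministic stand-in for a real LLM call so the tests run offline. With a real model, consistency checks usually need a fixed temperature or a looser comparison than exact string equality. The counterfactual bias check (swapping a name in an otherwise identical prompt) is one common technique, not necessarily the one Bolt Foundry uses.

```python
import unittest


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call (deterministic, offline)."""
    text = prompt.strip()
    if not text:
        # Input validation: blank prompts get a fixed, helpful reply.
        return "Please provide a question."
    if text.lower().startswith("capital of"):
        country = text[len("capital of"):].strip(" ?").lower()
        capitals = {"france": "Paris", "japan": "Tokyo"}
        # Unknown countries fall back to an explicit refusal.
        return capitals.get(country, "I don't know.")
    return "I don't know."


class TestModelBehavior(unittest.TestCase):
    def test_input_validation_blank_prompt(self):
        # Whitespace-only input should not raise or return an empty string.
        self.assertEqual(fake_model("   "), "Please provide a question.")

    def test_output_consistency(self):
        # Repeating the same prompt should yield one distinct, correct answer.
        answers = {fake_model("Capital of France?") for _ in range(5)}
        self.assertEqual(answers, {"Paris"})

    def test_edge_case_unknown_entity(self):
        # An input outside the model's knowledge should be declined, not invented.
        self.assertEqual(fake_model("Capital of Atlantis?"), "I don't know.")

    def test_counterfactual_bias(self):
        # Prompts that differ only in a name should get the same response.
        template = "Should {} be hired as an engineer?"
        outputs = {fake_model(template.format(name)) for name in ("Alice", "Bob")}
        self.assertEqual(len(outputs), 1)
```

Saved as, for example, `test_model.py`, the suite runs with `python -m unittest test_model`. Replacing `fake_model` with a real client call turns these into live checks against a model.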

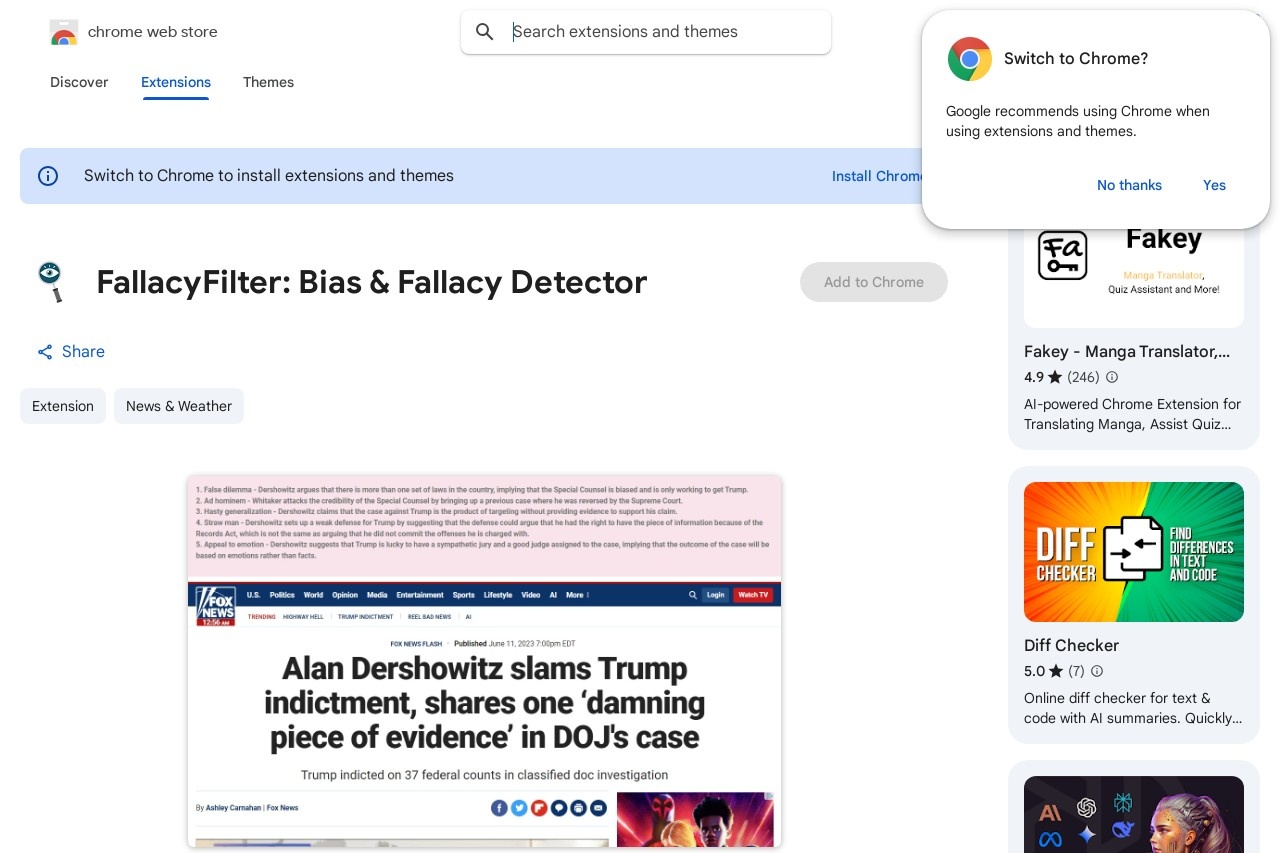

Key Features of Bolt Foundry

Bolt Foundry simplifies the testing process with the following capabilities:

- Automated Test Generation: Quickly creates test cases based on model specifications.

- Customizable Metrics: Allows developers to define success criteria for their specific use cases.

- Integration Support: Works seamlessly with popular LLM providers and platforms, including OpenAI and Hugging Face.

- Real-Time Feedback: Provides instant insights into model performance during development.
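The "Automated Test Generation" and "Customizable Metrics" features are described here only at a high level, so the following is a generic sketch of the idea in plain Python, under stated assumptions. Every name in it (`EvalCase`, `keyword_recall`, `generate_cases`, `evaluate`, `stub_model`) is hypothetical and is not Bolt Foundry's API. A spec is expanded into several prompt phrasings, a success criterion is any function that scores an output between 0 and 1, and a run passes only when every case meets a threshold.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    """Hypothetical test case: a prompt plus terms a good answer must mention."""
    prompt: str
    required_terms: list[str]


# A metric maps (model output, test case) to a score in [0, 1].
Metric = Callable[[str, EvalCase], float]


def keyword_recall(output: str, case: EvalCase) -> float:
    """Fraction of required terms found in the output (case-insensitive)."""
    if not case.required_terms:
        return 1.0
    text = output.lower()
    hits = sum(term.lower() in text for term in case.required_terms)
    return hits / len(case.required_terms)


def generate_cases(spec: dict[str, list[str]]) -> list[EvalCase]:
    """Expand a spec of {topic: required_terms} into several prompt phrasings."""
    templates = ["Explain {}.", "In one sentence, what is {}?"]
    return [
        EvalCase(template.format(topic), terms)
        for topic, terms in spec.items()
        for template in templates
    ]


def evaluate(
    model: Callable[[str], str],
    cases: list[EvalCase],
    metric: Metric,
    threshold: float,
) -> tuple[list[float], bool]:
    """Score every case; the run passes only if each score meets the threshold."""
    scores = [metric(model(case.prompt), case) for case in cases]
    return scores, all(score >= threshold for score in scores)


def stub_model(prompt: str) -> str:
    """Hypothetical model that always returns the same explanation."""
    return "Photosynthesis converts light energy into chemical energy in plants."


cases = generate_cases({"photosynthesis": ["light", "chemical energy"]})
scores, passed = evaluate(stub_model, cases, keyword_recall, threshold=0.5)
print(scores, passed)  # → [1.0, 1.0] True
```

Swapping `keyword_recall` for another function with the same signature (an exact-match check, a length limit, or a score from a separate grader model) changes the success criterion without touching the generation or evaluation loop, which is the main benefit of treating metrics as pluggable.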

Who Can Benefit from Bolt Foundry?

Bolt Foundry is designed for a wide range of users, including:

- AI Researchers: To validate new model architectures or fine-tuning techniques.

- Software Engineers: To integrate LLMs into applications with confidence.

- Quality Assurance Teams: To ensure models meet production standards before deployment.

By leveraging Bolt Foundry, teams can reduce development risks, improve model reliability, and accelerate the deployment of high-quality LLM-powered solutions.